I like skirts. I hope one day men are able to wear them without being judged by the square minds out there. Even miniskirts. I think the Wimbledon tournament should require male players to wear white miniskirts; it would bring us to a new level of tennis. It was equally great when women were liberated from the skirt and got to wear pants last century.

But we will be talking about a different type of skirt. Here is the story.

When generation algorithms run in parallel you have to deal with multiple world chunks at the same time. You can think of a chess board and imagine all black squares are generated at once. You could put anything you want in these squares and it would be alright, you would never get discontinuities along the edges because black squares never share edges.

Now comes the time where you need to generate the white squares. At this point you need to think about the edges, make sure anything you place in the white square will connect properly with the adjacent black square. You have two options here:

1. You remember what was in the black squares.

2. Your generation algorithm must be able to produce content "locally", that is, the value obtained for one point does not depend on the neighboring points.

In most cases we opt for (2). This is how noise functions like Perlin's and Worley's work. This is also how Wang Tiles and derivative methods work. Once your generation function is "local", it does not really matter in which order you generate your chunks. They will always line up correctly along the edges. This choice of (2) may seem a no-brainer at this point, but we will come back to this decision later.
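Here is a minimal sketch of what "local" means in practice. It is not any particular noise function: `local_value` and `generate_chunk` are made-up names, and the hash is only a stand-in for something like Perlin or Worley noise. The point is that each sample depends on nothing but its own coordinates, so two chunks generated independently agree on the edge they share.

```python
import hashlib


def local_value(x: int, y: int, seed: int = 0) -> float:
    """Value in [0, 1) that depends only on (x, y, seed), never on neighbors."""
    digest = hashlib.sha256(f"{seed}:{x}:{y}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def generate_chunk(cx: int, cy: int, size: int = 4, seed: int = 0):
    """Sample a (size + 1) x (size + 1) grid so adjacent chunks share edge samples."""
    x0, y0 = cx * size, cy * size
    return [[local_value(x0 + i, y0 + j, seed) for i in range(size + 1)]
            for j in range(size + 1)]


# A "black" square and the "white" square to its right, generated independently.
black = generate_chunk(0, 0)
white = generate_chunk(1, 0)

right_edge_of_black = [row[-1] for row in black]
left_edge_of_white = [row[0] for row in white]
assert right_edge_of_black == left_edge_of_white
```

Swapping the order of the two `generate_chunk` calls changes nothing, which is exactly why the order of generation stops mattering.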

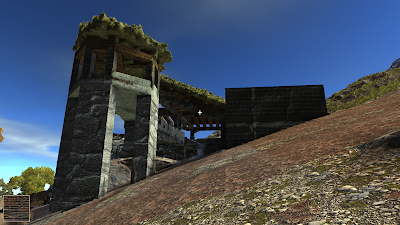

Now, if instead of a checkerboard arrangement you have multiple levels of detail next to each other (a clipmap), you soon run into a problem. Running the same local function at different resolutions creates discontinuities. They will appear as holes in the resulting world mesh. The following screenshot shows some of them:

The clean, nice solution for this is to create a thin mesh that connects both levels of detail. This is usually called a "seam". This is not difficult for 2D clipmaps. For a full 3D clipmap it can get a bit messy.
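To see where the holes come from, it helps to look at a 1D slice along the border between two cells. The sketch below assumes a toy terrain function (`height`, here just a sine) sampled at two resolutions. Where the two cells share a sample they agree, but in between the coarse cell draws a straight line that the fine cell does not follow. That difference is the crack.

```python
import math


def height(x: float) -> float:
    """Hypothetical local terrain function; any smooth function would do."""
    return math.sin(x)


def sample_edge(x0: float, x1: float, steps: int):
    """Sample the shared border at a given resolution."""
    xs = [x0 + (x1 - x0) * i / steps for i in range(steps + 1)]
    return [(x, height(x)) for x in xs]


def lerp_height(samples, x: float) -> float:
    """Height of the piecewise-linear border at x, as the mesh would draw it."""
    for (xa, ha), (xb, hb) in zip(samples, samples[1:]):
        if xa <= x <= xb:
            t = (x - xa) / (xb - xa)
            return ha + t * (hb - ha)
    raise ValueError("x is outside the sampled range")


fine = sample_edge(0.0, 2.0, 4)    # high-detail cell, spacing 0.5
coarse = sample_edge(0.0, 2.0, 2)  # low-detail cell, spacing 1.0

# Vertical gap between the two meshes at every fine vertex.
gaps = [abs(h - lerp_height(coarse, x)) for x, h in fine]
for (x, _), gap in zip(fine, gaps):
    print(f"x = {x:.1f}  gap = {gap:.4f}")
```

The gap is zero at the shared samples and non-zero at the fine vertices in between. A seam or a skirt has to cover those in-between gaps.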

In general your way out of this is always to extend the same algorithm you use for meshing. For instance, if you are using marching cubes, you will need a modified marching cubes that runs at one resolution on one end, and at a different resolution on the other end. This is exactly what the guys behind the C4 engine have done with their Transvoxel algorithm:

http://www.terathon.com/voxels/

In my case I chose not to use seams in the beginning at all, but a different technique called skirts. This is a technique that was often applied to 2D clipmaps as well. The idea is to create a thin mesh that is perpendicular to the edge where the discontinuity appears. While this would not connect to the neighboring cell, it does hide the holes you get just like the seams.
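For the simple 2D case, a skirt can be sketched in a few lines. This is only an illustration under some assumptions: the terrain is a heightmap with `y` pointing up, the skirt simply hangs straight down from the border, and `build_skirt` and `depth` are names I made up for the example. Note that the function only ever looks at the cell's own border vertices.

```python
def build_skirt(edge, depth):
    """Build a strip hanging `depth` units below one border of a cell.

    edge: list of (x, y, z) vertices along the border, with y as "up".
    Returns (vertices, triangles), where triangles index into vertices.
    Triangle winding is arbitrary in this sketch.
    """
    n = len(edge)
    # Top row is the border itself, bottom row is the same border pushed down.
    vertices = list(edge) + [(x, y - depth, z) for x, y, z in edge]
    triangles = []
    for i in range(n - 1):
        top_a, top_b = i, i + 1
        bot_a, bot_b = n + i, n + i + 1
        triangles.append((top_a, bot_a, top_b))
        triangles.append((top_b, bot_a, bot_b))
    return vertices, triangles


border = [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0), (2.0, 1.5, 0.0)]
skirt_vertices, skirt_triangles = build_skirt(border, depth=0.5)
```

The `depth` has to be at least as large as the biggest gap you expect between the two levels of detail, otherwise the hole still shows.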

Just like seams, skirts in 3D clipmaps are kind of complicated as well. Imagine you are doing a thin vertical column. You need to make sure the skirts go into the right angle and never go too far. You don't want these skirts protruding out of the other side of your mesh.

Skirts have a big problem. Since the vertices in the skirt mesh do not connect to the other end of the edge, you will have some polygons overlapping on screen. This can produce z-fighting at render time. This is not a big deal: you can always shift the skirts in the Z-buffer and make sure they will never fight with the main geometry in your clipmap cells. But this works only if the geometry is opaque. If you are rendering water or glass, skirts make rendering transparent meshes a lot more difficult.

Still skirts have a massive advantage over seams. In order to produce seams you must evaluate the same function at two different resolutions for adjacent cells. If your function has a time penalty per cell, let's say you need to load some data, or access some generation cache, you will be paying this penalty twice for every cell that has a seam. You pay it once when you generate the contents of the cell, then again when you generate the seam.

A properly generated seam creates a serial link between two neighboring cells. For a system you want to be massively parallel, any serial elements come at a price. There is no way around this: you either pay the price in processing time or in memory (where you cache the results of earlier processing). Skirts, on the other hand, can be computed with no knowledge of neighboring cells. They are inherently parallel.

Back to the checkerboard example, even if you chose option (2), when you are doing seams you will be forced to look into the black squares when you are generating the white ones. Skirts have yet another advantage. Nothing is really forcing you to use the same function from one square to the next. Even if the function has discontinuities, the skirts will mask them. You may think this never happens, and that is true while you are using simpler local functions like Perlin noise or tilesets. But at some point you may be generating something that your standard seaming cannot mend. All it takes is for the generation function to produce slightly different results at different levels of detail.

Anyway in my case it was time to get properly connecting seams. They would be nice for water, ice crystals, glass and other transparent materials in the world.

I run the dual contouring mesh generation over the seam space. Like in the Transvoxel algorithm, the voxels on one side have double the resolution of those on the other side. Instead of going back and generating portions of the neighboring cells, I just store their boundaries. So there is a little bit of option (1) in the checkerboard example. It adds some memory overhead, but it is worth the savings in processing time.
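The boundary-storing idea can be sketched roughly like this. It is not my actual implementation and it leaves out the dual contouring step entirely. `BoundaryCache`, `generate_cell`, `seam_inputs` and `counted_field` are hypothetical names, and the cells are 2D unit squares to keep it short. What it shows is the trade: each cell saves its border samples when it is generated, and the seam step reads those instead of calling the generation function again.

```python
from typing import Callable, Dict, List, Tuple

Field = Callable[[float, float], float]


class BoundaryCache:
    """Keeps only the border samples of each generated cell."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, str], List[float]] = {}

    def put(self, cell_x: int, side: str, samples: List[float]) -> None:
        self._store[(cell_x, side)] = samples

    def get(self, cell_x: int, side: str) -> List[float]:
        return self._store[(cell_x, side)]


def generate_cell(cell_x: int, resolution: int, field: Field,
                  cache: BoundaryCache) -> List[List[float]]:
    """Sample the unit cell [cell_x, cell_x + 1] x [0, 1] and cache its borders."""
    step = 1.0 / resolution
    grid = [[field(cell_x + i * step, j * step) for i in range(resolution + 1)]
            for j in range(resolution + 1)]
    cache.put(cell_x, "left", [row[0] for row in grid])
    cache.put(cell_x, "right", [row[-1] for row in grid])
    return grid


def seam_inputs(left_cell: int, right_cell: int, cache: BoundaryCache):
    """Fetch both sides of a seam from the cache, without touching the field."""
    return cache.get(left_cell, "right"), cache.get(right_cell, "left")


calls = 0


def counted_field(x: float, y: float) -> float:
    """Stand-in for an expensive generation function; counts its evaluations."""
    global calls
    calls += 1
    return x * x + y


cache = BoundaryCache()
generate_cell(0, 8, counted_field, cache)  # fine cell
generate_cell(1, 4, counted_field, cache)  # coarse cell, half the resolution
calls_after_generation = calls

fine_side, coarse_side = seam_inputs(0, 1, cache)
assert calls == calls_after_generation  # the seam step cost no new evaluations
assert len(fine_side) == 9 and len(coarse_side) == 5
```

The memory cost is one border per cell face, which is small compared to the cell itself.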

Here you can see some results in wireframe:

The seams appear in yellow.

I am actually not finished with this yet. Still need to bring the right materials and normals into the seams. But I would say the hard part is over.
